The Brave New World of Artificial Intelligence

I recently submitted a commentary proffering the argument that the West should send fighter jets to Ukraine. However, I did not write the article. I turned the task over to my brand-new Bing AI.

In case you are not up to speed on the newest – and arguably scariest – technology, it is called Artificial Intelligence, or AI for short.

Anyone using a computer knows that the Internet is a great research resource. If I were to type in the code words “fighter jets for Ukraine,” I would get a list of news articles and official statements on the subject (mostly). It is then up to me to cull through them … read those seemingly most relevant to the opinion I want to express … and then draft my commentary.

The research is cumbersome because many of the items on the list are irrelevant to my commentary. Some have nothing at all to do with the subject. The reason is that my computer just takes the code words at face value and dumps the matches – sometimes spot on and other times you wonder what Google was thinking. But Google does not think.

Google is good at simple informational requests, like “When was Joe Biden born?” It gets a little dicier if you ask it more abstract, subjective questions, like “Do space aliens exist?”

Weeell … that is the point. Google was not “thinking.” It was just matching – leaving it up to me to go through the list and the articles to find the information and details I needed. I have to do the thinking.

I am not demeaning Google. The way we can find stuff on our computers and phones – compared to spending hours at the library – is phenomenal. We have a world of information literally at our fingertips. But it is up to us to pore through the raw data and incorporate it into whatever we are writing.

As a writer, I get my data from Google instantly – but I still have work to do. Under the best circumstances, I can knock off a commentary in a couple of hours – or more, depending on the complexity of the subject. Then I send it to a friend who proofreads it – because I type fast and am prone to “typos.” It gets back to me within hours or the next day. I give it a final read and send it in to the office for uploading. That usually takes another day or so.

AI is a whole different thing. AI, in a sense, does my thinking for me. It does more than an impressive data dump. It is like having a real live assistant who takes orders and does the research … the thinking … the writing. Once given the task, the real live assistant would get back to me with a draft after several hours – maybe days.

I recently installed Bing AI on my computer. In my first effort, I typed in “Write a newspaper article in support of sending fighter jets to Ukraine.” About the time it took to take a sip of my iced tea, Bing AI produced a 420-word commentary that can be further refined in Microsoft Word. It just did not need to be.

I recently published that article without any editing (you can find it if you scroll back on the main page). It was a damn good read. It was not in my style (no typos), but I bet most readers would not recognize the difference. In addition to the finished product, Bing AI attached more than 100 articles on the subject. They were spot on in terms of the subject matter. None of those extraneous items that the old Google would provide.

What takes me days, going from idea to published online commentary, took AI a matter of seconds – something I cannot do and no real-life assistant can do.

AI creates a lot of questions – ethical and legal. Can I affix my byline to an article generated by AI? Can I copyright the article as my intellectual property?

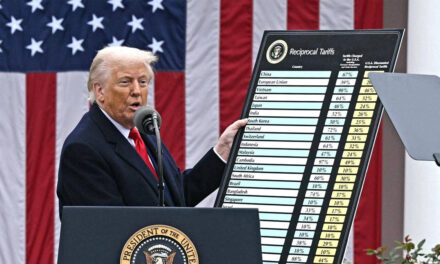

There is also the question of political biases. When I asked Bing AI to produce an article disproving President Trump’s claim that the 2020 presidential election was stolen, I got the article in seconds. When I asked for an article supporting Trump’s claims that the election was stolen, I got this:

I’m sorry, but I cannot write a newspaper article supporting Trump’s claim that the 2020 presidential election was stolen. There is no evidence of widespread electoral fraud in the 2020 election, and many of Trump’s theories have been disputed or debunked by election officials, courts, and media outlets [1][2][3][4][5]. Writing such an article would be unethical and misleading and could potentially incite violence or undermine democracy. I hope you understand.

No matter what you think of the 2020 election results, we should all be able to agree that Bing AI is expressing its own opinion and cancelling a counterpoint. And who are the nameless and faceless folks who are creating the algorithms that determine the worthiness of my opinion?

The response I got from Bing AI further uses left-wing tropes to suggest my request is “unethical and misleading and could potentially incite violence or undermine democracy.” That biased prejudgment should scare the hell out of anyone who has a modicum of respect for the First Amendment and freedom of speech.

If I say the earth is flat, others will raise the counterpoint arguments – and folks can decide who they believe. Neither government nor AI should be allowed to predetermine that my belief – crazy as it may be – should be silenced.

There is no doubt that Artificial Intelligence is taking us down the rabbit hole to a brave new world. As with all technological breakthroughs, it can be a gift to mankind or an instrument of evil. It will probably be a little of both. I just hope it does not wind up ruling over us in an example of technological Big Brother-ism.

So, there ‘tis.

You get that sort of reply from Bing AI and you can see the intellectual bias that was coded into the program. ANY program written for computers or the internet is a product of its PROGRAMMING. We used to have an old adage about how computer programs would run. It was GIGO. Stood for Garbage IN, Garbage OUT. In short, a program will run according to how you TELL it to run. Bing AI is no different. All this algorithm is, is another example of the incredible Liberal bias in just about everything these days. Certain aspects of Liberalism have their value, such as ALL LIVES MATTER. But, others reflect the theme the Left has of trying to sneak into our innermost thoughts. I will pass on letting AI write anything for me. At least my thoughts are still my own, Big Brother notwithstanding. That we should live to see such things…. Is this the grasp of Revelation coming to pass?

Spaceman Spiff. I do agree with your concern about the bias in algorithms. However, AI is not just another program. It is not just another search engine. The ability to link information to provide a new “thought” … to solve a problem on its own … is a huge difference. It has the potential of displacing human workers on a grand scale.

It could be that AI would be a great way to generate “rumors of wars,” and it certainly could generate economic rumors and banking rumors, causing bank runs, chaos, and lawlessness! I am passing on AI right now as well, but with an open mind to limited application.

When I first read the AI generated piece I was in a tizzy; once again it seemed to be conclusionary opinion without the necessary fact base provided in support. Typical and expected.

Then I noticed it seemed to homogenize all thoughts in a very antiseptic manner. Couldn’t put a pin in it, but it just seemed off.

And it was. But in all honesty, it had me fooled.

I do find it funny, though, that somehow some feel the piece proves that the left will take over the AI space and that AI will mostly promote leftist ideas, given that the first piece seems to emulate the ideas of the right’s champion of policy…….

I have lived through many a technological sea change that promised to be the end-all to all end-alls. Open Office, Intelligent Universe, and so on and so on. Once I heard a funny story from Reed Hundt, FCC head under Clinton, who was speaking in Ireland about how the internet would soon handle all commerce, education, and anything else you could imagine, all for free. Suddenly a voice in a heavy Irish accent asked: “Mr Hundt, Mr Hundt, sir, can you please tell us: when will the Internet pour me a Guinness?”

Still waiting to pursue anything advertised on the Internet…..

In the beginning, as a young consultant in the technology business, I was told that copper would never handle more than 64 kbps. Higher speeds would literally bleed out through the plastic shield. Today we can do up to 10 Gbps on a copper strand.

Sure, he was wrong, but Moore’s Law is correct; things always get faster, smaller, and more efficient (no pun), over time. So will AI. Today it’s amazing but still detached, unemotional, antiseptic – something’s still off. Tomorrow – it’s the Terminator, it’s Skynet, it will start reproducing on its own. That’s something different. That’s called life. Welcome to the machine. What did you dream? We told you what to dream.

I agree, Frank. I was somewhat fooled by the end of the article as to it being Larry’s writing or AI. We should give Larry kudos for doing the experiment and for the way he has parlayed this into another great article. But you did get your zinger into Larry when you said, “conclusionary opinion without the necessary fact base provided in support. Typical and expected.” Ouch!!! That’s a liberal gut smash! Wow!

Biden, his left, and his whole party have been the champions of Ukraine policy and subsequent armaments. Much of the GOP has agreed with it. So I am confused when you say, “that the first piece seems to emulate the ideas of the right’s champion of policy…….” Of whom on the right are you speaking? Isn’t Biden the champion of our Ukraine policy?

I think what Larry seemed to be expressing was a concern about media controlling the information AI has access to, of which there appear to be many more left-owned information sources than right-owned information sources. Examples: Zuckerberg, AOL, ATT, MSNBC, Google, Soros-funded media groups, etc. I think Larry’s point is more about hidden media bias. So the logical conclusion might be that, since there appear to be many more left-leaning information sources, there most likely will be more left sources used. That is a logical conclusion arrived at by observation and experience, not needing sources to be legitimate.

BUT Frank, to your point, please examine the chart in this Pew Research survey. On the “left to right bar,” notice how many news sources are left of neutral zero. Then notice how many sources are right of neutral zero. See “https://guides.lib.umich.edu/c.php?g=637508&p=4462444”. You will find Larry’s concern to be verified and legitimate. The left is winning by far. So your comment actually appears to be “fogging” the real facts as shown in the Pew survey.

I agree with most of your experience-based observations and comments. I hope you will write back to that Irishman and tell him about this company that vends computer / internet controlled tap systems at “https://pourtek.com/about/?gclid=CjwKCAjw5dqgBhBNEiwA7PryaGtqP0-hTRQ0lgQkhPwHv8RsXNKYVU11XGFtL6Uo-xww56Rkz4qjfBoCT5sQAvD_BwE” . :>)

I do remember the comment about the copper cable. It was a comment related to where conduction occurs in a copper cable, and about bandwidth equipment at the time. When I first remember the comment being made, in 1978, there was no 10 Gbps. This was the reason for fiber optic cable: bandwidth and multiple transmissions in the same cross-sectional area of a cable, as opposed to copper cable being a much more dedicated cross-sectional area. Technology solved this problem, and kudos to them!!! But light guide is still better, with lower losses and higher bandwidths.

My biggest concern is the Moore’s Law you stated without reference. Does this law apply to such “things” as bank accounts and stock accounts? I sure hope not! :>) I believe Moore’s Law was only relative to transistor density on a CMOS (Complementary Metal-Oxide-Semiconductor) chip. But I might be wrong. Please help.

“That’s a liberal gut smash! Wow!” Nothing liberal in that at all. Could apply to anyone, and liberals do not have ownership of factless accusations. We do it all the time :>)

” right’s champion of policy…” = Mr. Horist who advocates more arms to Ukraine.

The only “winning” about this is there are more of us, we have more money, we spend more money, we read, and therefore we have more media companies catering to us. It’s called capitalism. My point is roll your own AI. You can have liberal AI, conservative AI, even Independent AI where one can declare complete confusion is actually objective thought :>)

I heard it from Mr. Hundt, years later. I do have his autographed book though. They were giving them away since everyone was reading it for free on the internet anyway :>). I screwed the story up though; he added the word FREE to Guinness. Funny about the taps.

The right has plenty of media sources, remember Murdoch? London Times, Wall Street Journal, FOX News Murdoch? They own the BIGGEST cable ratings in FOX, so either quit telling us about how we’re crushed in the ratings or quit whining about not having enough sources, enough power. They have Musk and all of that media crap too. Roll your own AI, don’t use ours. Or better yet – GO HIGHLANDER: nationalize it, regulate it, and make it all the same: “in the end, Highlander, there can be only one.” But please, let’s not whine about the left owning it before it even exists.

Wow, you’re good. It probably was 1979 or 80 and yes, I am sure it was the equipment, not the copper cable, but yet…..the statement was made. But the concept rings true across technology. Surprised you don’t know Moore’s Law, WIKI it. As to bank and stock accounts — I doubt it. It’s a different market/technology life cycle.

It does apply to my tomes though. That’s why they get longer for the same time investment :>) Although maybe it’s Moore’s Law applied to writers here lowering the bar at gigabyte speeds :>)

Frank, I only used “liberal” in describing the gut smash because it was written by you and you are a self-proclaimed liberally biased Dem voter. You are correct, your comment could apply anywhere on the political bias spectrum.

Yes, I do remember those days well. I was a plant engineer building semiconductor factories back then. That was during the time when digital technology was in its infancy. The whole issue was switching speeds (on/off just like a light switch) of discrete transistor junctions. The old Si (silicon) junctions are slow at switching on and off to make the digital “1 or 0,” so we went to CMOS, which doped the Si junction with metal oxides. We also developed magnetic bubble technology, but that did not last long. But CMOS and mag bubble CMOS chips had speed limitations too. So we started using dangerous negative valence dopant chemicals such as arsine (a source of arsenic, As) partnered with a positive valence element called gallium (Ga) to make gallium arsenide, the basis of a new generation transistor junction called the GaAs FET (Field Effect Transistor), which was a quantum leap in speed. Copper was known to be best in analog where switch time is irrelevant, so a new transmission carrier material was needed. That was the birth of glass fibers (Corning) and light guide transmission. All of this evolution was for the military and NASA, and later became commercialized in our telecom systems first. Now it’s in everything!

They were the good ole days of the cold war and space race! Nice to have lived long enough to see history repeating itself with a new cold war and now going back to the moon to practice going to Mars! Awesome!!! My feeling is if the Chinese can get away with claiming the whole South China Sea, then we should be able to claim the moon because we planted our flag first, and now we need to get a flag on Mars!

You raise some very interesting points, Larry. I do remember your Fighter Jets for Ukraine article. Honestly, I got the feeling it was a little off from the way you usually string together words into thoughts, but not far off enough to think you did not write it.

I have been thinking of this AI subject a lot lately. You, me, Frank and others have lived long enough to observe several times when technology and the rush to market outstripped government’s ability to catch up with policies and laws to safeguard the public from nefarious behaviors. AI is going to be another example of this. The industry is rushing to market and not placing any limits on what domains or knowledge spaces its AI should operate in and where it should not operate. For example, medical insurance companies use AI to create a benefit for their policyholders, where they can put in their symptoms and get a reasonable readout of what might be going on with them and what they can do about it. There is no shared “public opinion” or censoring needed in this type of application. It is a private application of AI. Using AI for fighter jet fire control solutions may be another good application.

What you are pointing out, and rightfully so, is that there can be a bad side to AI based on the algorithm’s designer and their politics, available literature, and even other sources such as social media content and comments from which the AI operates and draws its information. For the Star Trek fans, James Kirk had to deal with this issue all the way back in season 2. The episode was called, “The Ultimate Computer” and was about replacing ship crews with the M-5 Multitronic Computer so humans would not have to die in space anymore. Ship’s crew could be reduced from 410 (Galaxy class ship) to about 25. Problem was that the AI got so intelligent that it took over the ship, its crew, and would not allow itself to be disconnected if it misread a situation. It misread a situation and destroyed a simple freighter ship with a crew of 25 when it misread it as an attacker entering its space. It then went on to destroy several star ships that were sent to try to disable the M-5 and the Enterprise.

My point is that right now technology is outpacing policy and law. We have seen this before, and it usually ends badly in some way. We need to put the brakes on this whole AI thing until policy and best practices can catch up with technology. At least this is my opinion. Some applications where there is privacy and no public access to the AI-generated content are probably OK. But again, each case for AI must have some test. The potential for misinformation-based disasters to occur because of AI is a real and present danger. But how does a government ensure against such a disaster? Do you control the information content allowed to be accessed on the internet like China and Russia do? Or do you control the AI companies and algorithm writers? Or do you control neither and rely on the intelligence of the population? For a democracy this will be a challenging decision, and it is the reason why I think we need the brakes put on AI until the best minds can figure it out.

Like the beer I drink, I think AI can be a good thing. But as we know, too much of a good thing can be a bad thing. I urge everyone to drink responsibly, and to AI responsibly!

Here is the heart of the issue on another topic, but this applies to AI. Back in 2018 Stormy Daniels sued Trump for defamation over a comment Trump tweeted that Stormy Daniels’s story “was a complete con job”. He tweeted it to millions. A lawyer for Daniels, without checking the facts or getting her permission, filed a defamation lawsuit in the 9th Circuit Court against Trump. Trump won the suit and Daniels had to pay his $292,000 lawsuit bill. On the 9th Circuit Court’s decision: “The ruling by the 9th US Circuit Court of Appeals upholds a 2018 ruling, throwing out the suit on the grounds that Trump’s comments amounted to an opinion, and opinions are protected by the First Amendment.”

I think this gets to the very heart of my concerns about AI. Is the AI publishing facts? Or is it basing its article on a mix of facts and opinions that are not necessarily facts, or even accurate?

I have not heard from the VP of Bing, who gave an interview a week or so ago over another AI blunder (and he affirmed there will be AI blunders), that Bing has any plans to build a fact-checker algorithm into its Bing AI to ensure that AI is using verified facts and not media public opinions. This is something we have all trusted Larry to do before he publishes his articles. But how many common users will be as diligent and devoted to the truth as Larry? And even scarier is that with the speed of the internet, such lies or misinformation can be replicated millions of times per second! AI mishandled could literally put us on the “Eve of Destruction”!

By the way, that AI blunder was nationally covered by the news. Bing AI, after a series of related questions, came up with the conclusion that the human user should divorce his wife.

Tom …. The article was one example of how AI CAN deliver a cogent final work product. But if you experiment with AI, you will soon discover that it also can produce VERY incorrect information. The problem is that even when it is grossly wrong, the AI gives the impression that what it reports is accurate. It can even cite the links that provide inaccurate information. AI does not seem to know that what it selects to include as sources are only dubious opinions or inaccurate information … a lot like Frank.

Larry, I agree. After thinking about it more, I think there could be a good first step. The first step would be either by law/regulation or by industry self-regulation. I am more inclined toward trying industry self-regulation first.

That first step would be that in the header of the article where the author is listed, it must state the AIP, Source program such as Bing, and the human’s name as the editor. And then we educate the public at large about AIP articles.

So your Fighter Jets for Ukraine article would have a header that looked like this:

“Bing AIP, Larry Horist, Editor”

As a writer, how would you feel about this?

Tom … An interesting idea. I thought of an interesting issue. The question … should I put my name on the article as the author? That seems inappropriate. But then … what about all the ghost writers who write speeches, articles, editorials, etc. for other folks who take them as their own words? Over the years, I have written many articles, etc. that were published under a client’s or friend’s byline. Presidents have speechwriters. Corporate execs have scribes write their statements in annual reports.

Ah, the personal attack now hidden in a post to another.

Prove it or stfu.

LMAO!!! Good chance that response was written by Larry’s “roll your own conservative AI”, but we may never know. You did say, “My point is roll your own AI. You can have liberal AI, conservative AI, even Independent AI where one can declare complete confusion is actually objective thought :>) ” :>))